In July, dozens of Indian Muslim women found their pictures displayed as “deals of the day” on an app that went by the name “Sulli Deals”. The app conducted mock auctions of these women to Hindu men.

“There was no real sale of any kind – the purpose of the app was just to degrade and humiliate,” the BBC reported.

Such perverse use of social media for targeted harassment isn’t new.

This kind of harassment is also frequently witnessed on Twitter. There were accounts that took responsibility for “Sulli Deals”. There are also accounts that regularly share pornographic content targeting Indian Muslim women.

Most social media sites ban pornographic content but not Twitter. The micro-blogging website is more accepting of porn. Twitter’s policy states that users cannot post adult content in live video or in profile, header or list banner images. But pornography and other forms of consensually produced adult content can be posted within tweets “provided that this media is marked as sensitive”.

Despite this guideline, unmarked pornographic content flourishes on Twitter. While the platform is used by porn performers to build their brands, Twitter’s inability to distinguish acceptable adult content from non-consensual objectification is hurting India’s Muslim community.

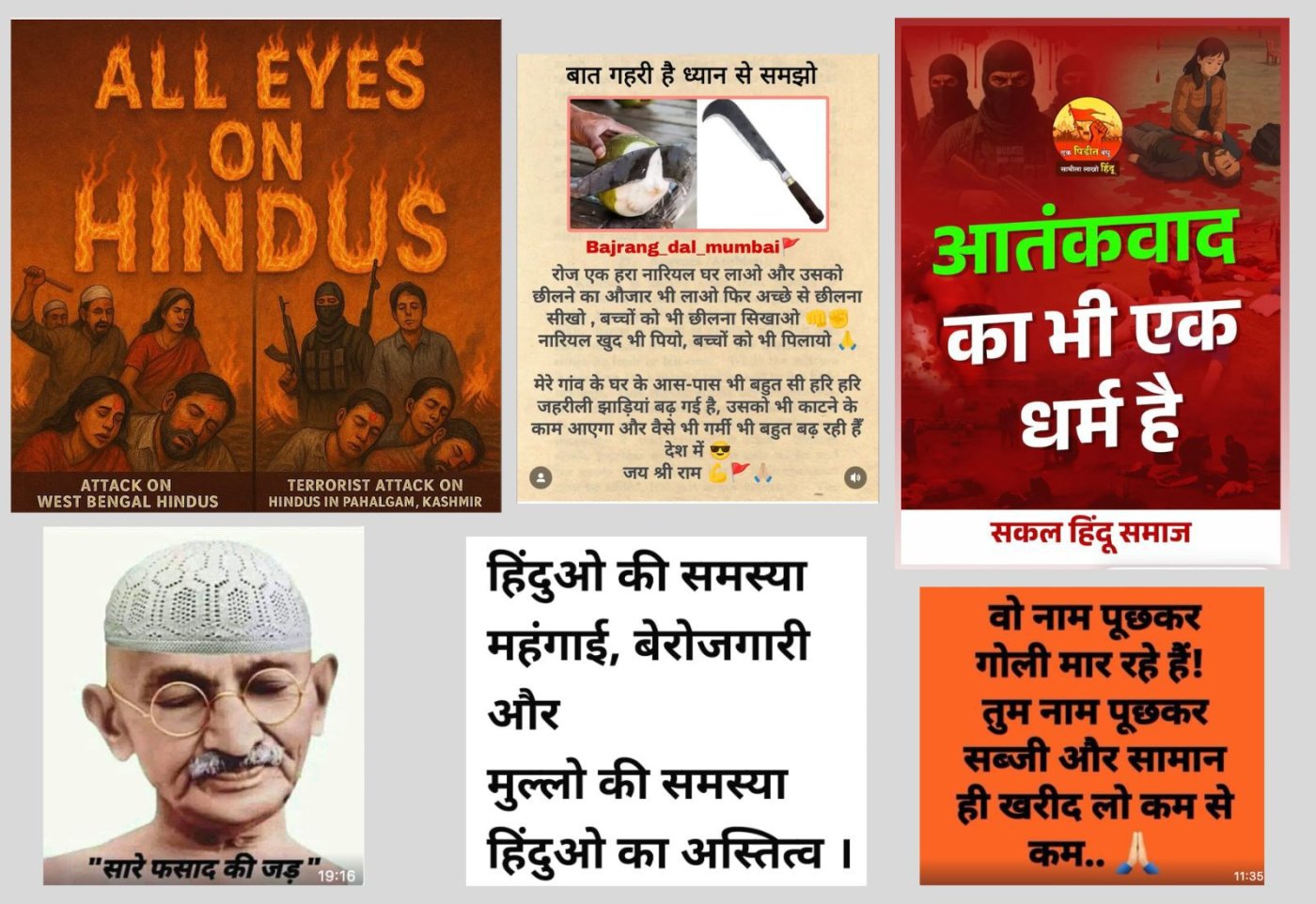

A plethora of handles promoting pornographic content showcasing Muslim women as submissive to Hindu men thrives on the platform. The language they use is filthy, to put it mildly.

In the post below, a handle has shared two pictures of women in hijab – one of them naked and the other offering namaz – and the caption gives a violent description of how Muslim women secretly want “Sanghi” men to have rough, forceful intercourse with them.

This author reported the above Twitter handle while writing this story. The handle was suspended for violating Twitter’s norms.

Another post reported by the author made a reference to the demolition of the Babri Masjid and how Muslim women want Hindu men to “demolish” them in the same way. It was also suspended by Twitter.

There are also tweets that purport to show Muslim women having sex with men identified as members of the Rashtriya Swayamsevak Sangh. The tweets show men “painted saffron” with the word “RSS” imprinted on them. But these are too offensive to be added to the report. Some of the tweets portray Muslim women as wanting to have sex with members of Bharatiya Janata Party and Bajrang Dal. The tweets that were unreported by this author remain on the platform.

Twitter’s policy on ‘non-consensual’ content

Twitter does not give a specific option of reporting oppressive pornographic content. These tweets can be reported under “targeted harassment”.

However, Twitter does give the option of reporting “non-consensual” nudity.

“We put the people who use our service first at every step, and have made progress when it comes to making Twitter a safer place,” wrote a Twitter spokesperson in an email response to questions about the steps taken to tackle the problem of the vulgar representation of Muslim women through pornographic content.

The spokesperson explained: “While we welcome people to express themselves freely on Twitter, we have policies in place to specifically address abuse and harassment, non-consensual nudity and hateful conduct. We take action on accounts that engage in behaviors that violate Twitter rules in line with our range of enforcement options. Under our non-consensual nudity policy we prohibit posting or sharing intimate photos or videos of someone without their consent and is a severe violation of their privacy and the Twitter rules.”

How does Twitter differentiate between consensual and non-consensual porn?

The website’s non-consensual nudity policy allows users to report content that appears to have been taken or shared without a person’s consent. The type of content reportable by anyone is below.

For other types of content Twitter says: “We may need to hear directly from the individual(s) featured (or an authorised representative, such as a lawyer) to ensure that we have sufficient context before taking any enforcement action.”

Twitter’s policy appears to put the burden on users to report violations.

The problems with this policy became clear last year, when a photograph of a semi-naked woman was shared on Twitter with a caption claiming that it was of a Jamia Millia Islamia student named Ladeeda Farzana.

This author wrote a fact-check article about it for Alt News. During the course of the reporting, the photograph was found to be of a woman who was herself a victim of revenge porn. Her ex-boyfriend had published intimate pictures and videos of her on pornographic websites. One of them had been picked up to target Farzana.

A tweet carrying the image was reported to Twitter by this author but it was not taken down by the platform. This may be because the tweet was not directly reported by the affected person.

In 2017, US television personality Rob Kardashian shared nude photos of his ex-girlfriend, seemingly without her permission, and the pictures remained on Twitter for 30 minutes before they were taken down. It is unclear whether Twitter had stepped in or Kardashian himself had removed the photos. What we do know is that Twitter did not suspend Kardashian’s account.

Given Twitter’s inadequate reaction to purported revenge porn in the West, it is no wonder that pictures of the woman used to target Farzana are still available in the Indian Twitter ecosystem more than a year after they were posted.

In another egregious instance, a pornographic video was shared last year to target the women of Shaheen Bagh protesting against the Citizenship Amendment Act. This too is yet to be taken down by Twitter.

However, the platform claims that it has taken steps to contain non-consensual nudity. Twitter’s spokesperson wrote in the email response, “As per the latest Twitter Transparency Center update between July-December 2020, we took enforcement action on 27,087 accounts containing non-consensual nudity, an increase of 194% from the prior reporting period. From July to December 2020, we also saw the largest increase in the number of accounts actioned under this policy.”

The spokesperson added: “Further, we have launched a number of initiatives, like more precise machine learning, to better detect and take action on content that violates our abuse and harassment policy. As a result, globally there was a 142% increase in accounts actioned, compared to the previous reporting period; 964,459 in total. There was also a 77% increase in the number of accounts actioned for violations of our hateful conduct policy during this reporting period globally.”

Bias against non-English languages

A bigger problem is revealed when consensual content is shared with distorted context. For instance, a suggestive video of a married couple from Trinidad and Tobago working out in a gym went viral in India with a “love jihad” claim. Captions in Hindi suggested that the gym had been started by a Muslim man to trap Hindu women into entering romantic relationships with him so that he could later force them to convert to Islam.

How will Twitter, assuming that it intends to, take down such posts when the video does not violate its norms? It is the caption that’s objectionable.

Besides, harmful content shared in non-English languages does not seem to undergo equal scrutiny. In 2019, Twitter expanded its hateful conduct policy to prohibit language that dehumanises people on the basis of race, ethnicity, or national origin. Some of the words given as examples are cockroaches, leeches, maggots.

But what about objectionable language in Hindi? There are several posts that use offensive words for Muslim women. They have not been flagged.

Pornographic handles also routinely post pictures of prominent Muslim women – dancer Nora Fatehi, show business personality Sana Khan and Member of Parliament Nusrat Jahan – with obscene captions.

While feminists have long argued that the power dynamics in porn augment societal misogyny, this particular brand of pornography is arguably more problematic.

India’s minority Muslim community is marginalised, discriminated against and demonised. Twitter posts of this kind show how Muslim women are attacked not only for their faith but also for their gender identity.

The imagery claiming that Muslim women want to have violent sex with men who demolished the Babri Masjid is a perverse glorification of the violence against and subjugation of their community.

Twitter has not acted against many such accounts because the handles have neither been reported by users nor detected by the platform. While Twitter does not ban nudity, it does need better policies to identify porn that is used as a tool to further the oppression of Muslim women.

This story first appeared on scroll.in