“We can keep making messages go viral, whether they are real or fake, sweet or sour.”

– Amit Shah, Home Minister of India, 2018

In late December 2021, Yati Narsinghanand Saraswati, a Hindu temple priest, organized a conference of Hindu extremist leaders in the Indian city of Haridwar. Videos from the event show speakers openly calling for genocide against India’s Muslim minority while officials from India’s Bharatiya Janata Party (BJP) sat in the audience.

How did an extremist like Saraswati gain such a following? One answer: Facebook. Saraswati’s videos calling for the extermination of Muslims have been viewed millions of times on the social media platform. Saraswati’s growing prominence, and the Haridwar conference, contributed to Indian journalist Arfa Khanum Sherwani’s comment that “as an Indian Muslim I have never felt as unsafe and alienated as I do today.” In the fall of 2021, testimony and leaked documents from Frances Haugen, a whistleblower at Facebook (now Meta), confirmed what victims of hate speech and violence in India already knew: Facebook has been willfully ignoring widespread disinformation and incitement by Hindu nationalist supporters of Indian Prime Minister Narendra Modi and his BJP.

With an estimated 340 million Facebook users, more than the entire population of the United States, India has more people on Facebook than any other country. Yet Haugen’s disclosures revealed a stunning lack of attention and resources devoted to tackling hate speech and disinformation in India. The information from Haugen and other whistleblowers further shows how Facebook India has allowed disinformation and hate speech against Muslims and other minorities to spread, often leading to offline violence.

How Hindu Nationalists Have Weaponized Social Media

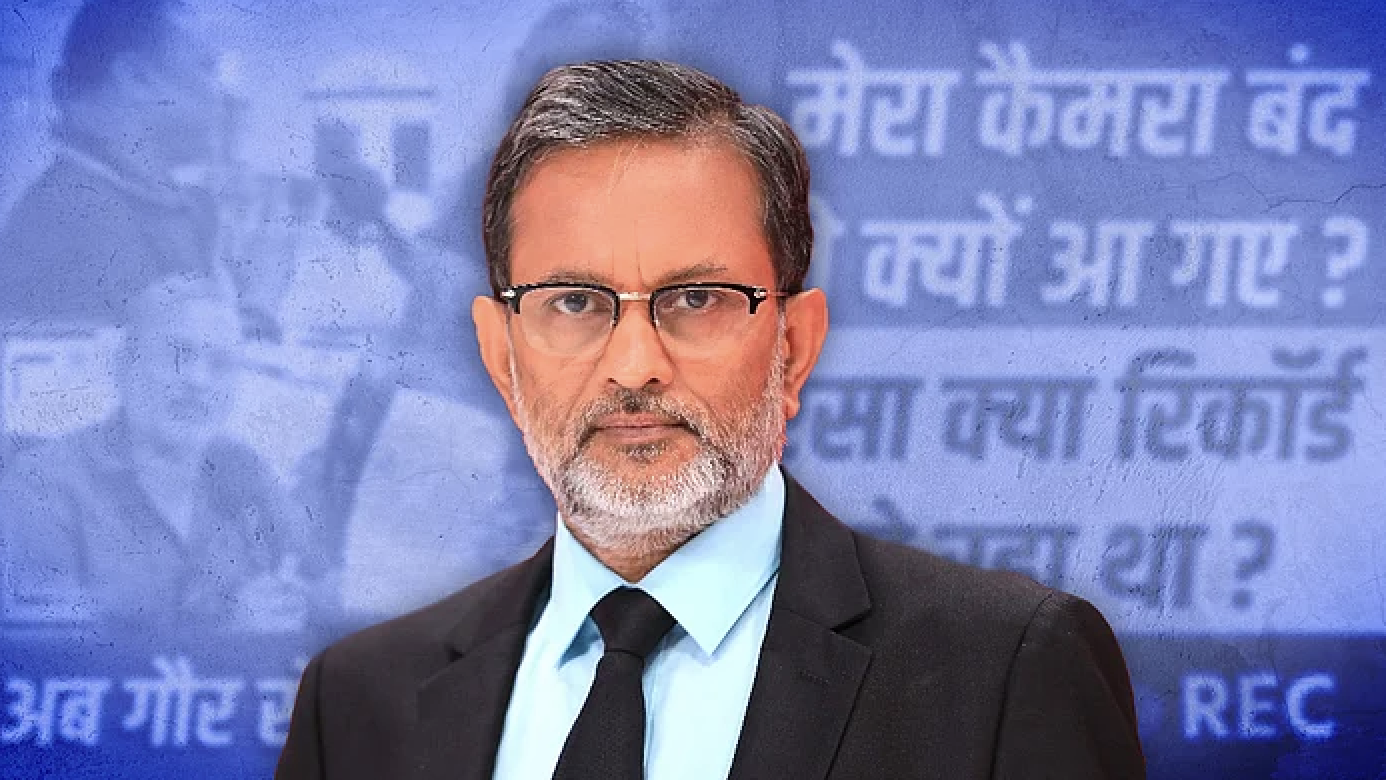

Narendra Modi’s victory in 2014, and his re-election in 2019, owed much to the BJP’s savvy use of social media. A vast network of volunteers works for the BJP’s so-called IT Cell, which churns out posts spreading disinformation and conspiracy theories across Facebook, WhatsApp, and Twitter. In 2018, before Modi’s re-election, Amit Shah, then BJP President and now India’s Home Minister, boasted to these volunteers: “We can keep making messages go viral, whether they are real or fake, sweet or sour.” Shah further noted, “It is through social media that we have to form governments at the state and national level.”

Shah also made special mention of WhatsApp (owned by Facebook), noting that in Uttar Pradesh, India’s most populous state, the BJP sent daily messages to 3.2 million people. Similarly, before the 2020 elections in the state of Bihar, the BJP formed 72,000 WhatsApp groups. The BJP IT Cell isn’t alone; hundreds of other Hindu nationalist groups follow its example and spread hate speech on their own Facebook and WhatsApp groups. On Twitter, Prime Minister Modi has even followed multiple accounts that have spread hateful rhetoric about minorities.

Inaction From the Top

Haugen wasn’t the first Facebook employee to raise disinformation and hate speech in India as an important issue. As revealed in a trove of documents released by Haugen, a Facebook researcher in 2019 created a test user based in Kerala, India, and subsequently reported: “Following this test user’s News Feed, I’ve seen more images of dead people in the past three weeks than I’ve seen in my entire life total.” Facebook researchers had also previously recommended designating the Hindu nationalist group Bajrang Dal as a dangerous organization and banning it from the platform. However, as The Wall Street Journal reported in 2020, Facebook ultimately did not designate the group as a dangerous organization due to concerns over business prospects and staff security.

Unfortunately, efforts to act on these findings have been repeatedly blocked by Facebook’s leadership. As early as May 2019, Indian journalists had pointed out a troubling “revolving door” between Facebook India’s leadership and the BJP in a book titled The Real Face of Facebook in India. Revolving-door executives include Shivnath Thukral, who oversaw Modi’s digital strategy in 2014 and is now WhatsApp’s Public Policy Director for India. When questioned by the Delhi Legislative Assembly over Facebook’s role in inciting violence against Muslims in early 2020, Thukral declined to answer several questions, citing his right not to reply. Another executive, Ankhi Das, opposed internal efforts to ban BJP politicians who were spreading disinformation and hate speech on Facebook. Facebook denied accusations that it had shown favor to the BJP, and Das left the company in October 2020, following a Wall Street Journal exposé.

The connections between the BJP and top Facebook executives underscore the politicization of the social media platform as it breaks into India’s growing market. Facebook officials, for instance, had previously trained BJP IT Cell members in the use of the platform. As reported by whistleblower Sophie Zhang, Facebook failed to block fake accounts linked to a BJP member of parliament, even as it took down similar accounts linked to opposition parties. In other cases, the BJP government has ordered Facebook and Twitter to remove posts that were critical of the government. The platforms complied in some of these cases and refused in others.

Real World Consequences

The consequences of Facebook’s failure to tackle disinformation have been devastating. The violence against Muslims in Delhi in early 2020 is one example of how hate speech and disinformation can directly lead to mob violence. Facebook’s own internal research documents noted a 300 percent spike in hate speech in the prelude to the Delhi riots. The riots claimed 53 lives, mostly Muslim, and displaced thousands to makeshift camps, where they were then struck by the COVID-19 pandemic.

Similarly, in 2021, Hindu nationalists reportedly used WhatsApp groups to orchestrate violence against mosques and Muslim-owned businesses and homes in the northeastern state of Tripura. However, despite the digital footprints left behind by the inciters, the Tripura police have taken no action against them. Instead, they have charged 102 people, many of them journalists, under Modi’s draconian UAPA anti-terrorism law. They were accused of causing “enmity between religious groups” for merely reporting on the violence and sharing their human concerns on Facebook and Twitter. One of the accused reporters had tweeted only three words: “Tripura is burning.”

Facebook’s failure to act, and its further efforts to quash even an independent Human Rights Impact Assessment that the company had itself commissioned, signal that the corporation is uninterested in addressing the problem and is actively trying to avoid responsibility.

Digital Colonialism

In recent years, commentators have coined the phrase “digital colonialism” to describe how predominantly U.S.-based tech corporations “have amassed trillions of dollars and gained excessive powers to control everything from business to labor to social media and entertainment in the Global South.” Today, Facebook and Google’s combined ad revenue in India exceeds the combined revenue of India’s top 10 media firms. By prioritizing profits, ad revenue, and entrance into emerging markets over the lives of millions of Indian Muslims, Christians, Dalits, and other marginalized communities, Facebook exemplifies the perils of this new avatar of colonialism.

As Facebook’s user base in India continues to grow, holding social media platforms accountable for misinformation and hate speech becomes all the more pressing. Although there have been occasional instances of Facebook challenging the current government—WhatsApp sued the government in 2021 over privacy issues—the Haugen testimony and other accounts reveal the deeper system-level issues at play that cannot be resolved through isolated efforts or statements. Facebook’s current protocol of responding to complaints at the level of individual pages or posts is inadequate for a country like India, given the sheer volume of traffic on its platforms. A more effective path forward would be to identify broader patterns of disinformation and hate speech, and to remove offending individuals and organizations before they incite mass violence.

Yet, despite a handful of statements from Facebook and its much-publicized Oversight Board, it seems unlikely that Facebook will make any significant changes with regard to India, out of fear of upsetting the Modi government. Only sustained public pressure from international human rights and civil society groups, together with action from U.S. policymakers, is likely to force Facebook to fix its devastating disinformation problem and end its role in democratic backsliding in India and across the world.